MIT6.828-Lab4

introduction

In this lab you will implement preemptive multitasking among multiple simultaneously active user-mode environments.

In part A you will add multiprocessor support to JOS, implement round-robin scheduling, and add basic environment management system calls (calls that create and destroy environments, and allocate/map memory).

In part B, you will implement a Unix-like fork(), which allows a user-mode environment to create copies of itself.

Finally, in part C you will add support for inter-process communication (IPC), allowing different user-mode environments to communicate and synchronize with each other explicitly. You will also add support for hardware clock interrupts and preemption.

Part A: Multiprocessor Support and Cooperative Multitasking

Multiprocessor Support

We are going to make JOS support “symmetric multiprocessing” (SMP), a multiprocessor model in which all CPUs have equivalent access to system resources such as memory and I/O buses. While all CPUs are functionally identical in SMP, during the boot process they can be classified into two types: the bootstrap processor (BSP) is responsible for initializing the system and for booting the operating system; and the application processors (APs) are activated by the BSP only after the operating system is up and running. Which processor is the BSP is determined by the hardware and the BIOS.

A processor accesses its LAPIC using memory-mapped I/O (MMIO). In MMIO, a portion of physical memory is hardwired to the registers of some I/O devices, so the same load/store instructions typically used to access memory can be used to access device registers.

Exercise1: implement mmio_map_region as below:

1 | |

Application Processor Bootstrap

- Before booting up APs, the BSP should first collect information about the multiprocessor system, such as the total number of CPUs, their APIC IDs and the MMIO address of the LAPIC unit.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18void

mp_init(void)

{

struct mp *mp; // mp结构用于指明configure table在哪里

struct mpconf *conf; // mpconf结构表示configure table header

struct mpproc *proc; // mpconfigure table entry

uint8_t *p;

unsigned int i;

bootcpu = &cpus[0];

if ((conf = mpconfig(&mp)) == 0)

return;

ismp = 1;

lapicaddr = conf->lapicaddr;

// 遍历configure table,确定boot cpu,可用cpu数

for (p = conf->entries, i = 0; i < conf->entry; i++) {

............. FUNC::boot_aps()copies the AP entry code (kern/mpentry.S) to0x7000.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26// Start the non-boot (AP) processors.

static void

boot_aps(void)

{

extern unsigned char mpentry_start[], mpentry_end[];

void *code;

struct CpuInfo *c;

// Write entry code to unused memory at MPENTRY_PADDR

code = KADDR(MPENTRY_PADDR);

memmove(code, mpentry_start, mpentry_end - mpentry_start);

// Boot each AP one at a time

for (c = cpus; c < cpus + ncpu; c++) {

if (c == cpus + cpunum()) // We've started already.

continue;

// Tell mpentry.S what stack to use

mpentry_kstack = percpu_kstacks[c - cpus] + KSTKSIZE;

// Start the CPU at mpentry_start

lapic_startap(c->cpu_id, PADDR(code));

// Wait for the CPU to finish some basic setup in mp_main()

while(c->cpu_status != CPU_STARTED)

;

}

}

‘Cause in the boot.S, its LMA is equal to its VMA cause of it’s in the real-mode, but here in the kern/mpentry.S, it’s in the protected-mode, so it needs MPBOOTPHYS to turn VMA into LMA

Per-CPU State and Initialization

When writing a multiprocessor OS, it is important to distinguish between per-CPU state that is private to each processor, and global state that the whole system shares.

- Per-CPU kernel stack

Because multiple CPUs can trap into the kernel simultaneously, we need a separate kernel stack for each processor to prevent them from interfering with each other’s execution. The arraypercpu_kstacks[NCPU][KSTKSIZE]reserves space for NCPU’s worth of kernel stacks.

code here:1

2

3

4int i;

for (i = 0; i < NCPU; i++) {

boot_map_region(kern_pgdir, KSTACKTOP - KSTKSIZE - (KSTKSIZE + KSTKGAP) * i, KSTKSIZE, PADDR(percpu_kstacks[i]), PTE_W);

} - Per-CPU TSS and TSS descriptor

A per-CPU task state segment (TSS) is also needed in order to specify where each CPU’s kernel stack lives. The TSS for CPU i is stored incpus[i].cpu_ts, and the corresponding TSS descriptor is defined in the GDT entrygdt[(GD_TSS0 >> 3) + i]. Note thatFUNC::trap_init_percpuis called by different cpu1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19// LAB 4: Your code here:

cprintf("%d CPU is calling trap_init_percpu()\n", thiscpu->cpu_id);

struct Taskstate *thists = &thiscpu->cpu_ts;

thists->ts_esp0 = KSTACKTOP - thiscpu->cpu_id * (KSTKSIZE + KSTKGAP);

thists->ts_ss0 = GD_KD;

thists->ts_iomb = sizeof(struct Taskstate);

// Initialize the TSS slot of the gdt.

gdt[(GD_TSS0 >> 3) + thiscpu->cpu_id] = SEG16(STS_T32A, (uint32_t) (thists),

sizeof(struct Taskstate) - 1, 0);

gdt[(GD_TSS0 >> 3) + thiscpu->cpu_id].sd_s = 0;

// Load the TSS selector (like other segment selectors, the

// bottom three bits are special; we leave them 0)

ltr(GD_TSS0+ (thiscpu->cpu_id << 3));

// Load the IDT

lidt(&idt_pd); - Per-CPU systen registers

All registers, including system registers, are private to a CPU. Therefore, instructions that initialize these registers, such aslcr3(),ltr(),lgdt(),lidt(), etc., must be executed once on each CPU.

Locking

Our current code spins after initializing the AP in mp_main(). Before letting the AP get any further, we need to first address race conditions when multiple CPUs run kernel code simultaneously. The simplest way to achieve this is to use a big kernel lock. The big kernel lock is a single global lock that is held whenever an environment enters kernel mode, and is released when the environment returns to user mode. In this model, environments in user mode can run concurrently on any available CPUs, but no more than one environment can run in kernel mode; any other environments that try to enter kernel mode are forced to wait.

1 | |

A: We still need separate kernel stacks for each CPU because there even though only one can run at a time, if we have a shared kernel stack, when a processor enters the kernel, it pushes a trap frame. When it leaves, it pops the trap frame. If any other process entered between this time, the trap prace the process is popping when leaving will not be it’s own, but the process of another trap frame that entered the stack. Thus it will not re-enter at the correct location.

Round-Robin Scheduling

Change the JOS kernel so that it can alternate between multiple environments in “round-robin” fashion.

- The function

sched_yield()in the newkern/sched.cis responsible for selecting a new environment to run. It picks the first environment it finds with a status of ENV_RUNNABLE (see inc/env.h), and calls env_run() to jump into that environment.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16// LAB 4: Your code here.

int i;

if (curenv) {

for (i = ENVX(curenv->env_id) + 1; i != ENVX(curenv->env_id); i = (i + 1) % NENV) {

if (envs[i].env_status == ENV_RUNNABLE)

env_run(envs + i);

}

if (curenv->env_status != ENV_NOT_RUNNABLE)

env_run(curenv);

}

else {

for (i = 0; i < NENV; ++i)

if (envs[i].env_status == ENV_RUNNABLE)

env_run(envs + i);

}

A: ‘Cause in FUNC::env_setup_vm, we already have copied PDE above UTOP from kern_pgdir to env_pgdir, and only difference is PDE which relates to env_pgidr itself.

A: when switching from kernel mode to user mode, hardware, trapentry.S, and _alltrap cooperate to store the stack frame into tf, and restore it by FUNC::env_pop_tf.

System Calls for Environment Creation

Although your kernel is now capable of running and switching between multiple user-level environments, it is still limited to running environments that the kernel initially set up. You will now implement the necessary JOS system calls to allow user environments to create and start other new user environments.

We will implement these primitive system calls to allow a Unix-like fork():

sys_exofork: This system call creates a new environment with an almost blank slate: nothing is mapped in the user portion of its address space, and it is not runnable. The new environment will have the same register state as the parent environment at the time of thesys_exoforkcall. Note that we have to seteaxregister to make sureexoforkwill return 0 in the child environment1

2

3

4

5

6

7

8

9

10struct Env* new_env;

int ret;

envid_t parent_id = curenv->env_id;

if ((ret = env_alloc(&new_env, parent_id)) == 0) {

new_env->env_status = ENV_NOT_RUNNABLE;

new_env->env_tf = curenv->env_tf;

ret = new_env->env_id;

new_env->env_tf.tf_regs.reg_eax = 0;

}

return ret;sys_env_set_status: explained by its name1

2

3

4

5

6struct Env *env;

int ret;

if ((ret = envid2env(envid, &env, 1)) == 0) {

env->env_status = status;

}

return ret;sys_page_alloc: Allocates a page of physical memory(The page’s contents are set to 0) and maps it at a given virtual address in a given environment’s address space.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18struct Env *env;

int ret;

if ((ret = envid2env(envid, &env, 1)) == 0) {

if ((uint32_t)va >= UTOP || (uint32_t)va % PGSIZE != 0 || (perm & (~PTE_SYSCALL)) != 0) {

ret = -E_INVAL;

return ret;

}

struct PageInfo *new_page = page_alloc(ALLOC_ZERO);

if (new_page == NULL) {

ret = -E_NO_MEM;

return ret;

}

ret = page_insert(env->env_pgdir, new_page, va, perm);

if (ret == -E_NO_MEM) {

page_free(new_page);

}

}

return ret;sys_page_map(envid_t srcenvid, void *srcva, envid_t dstenvid, void *dstva, int perm): let the new and the old mappings both refer to the same page of physical memory.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27struct Env *srcenv;

struct Env *dstenv;

struct PageInfo *pg;

pte_t *pte;

int ret = 0;

if (envid2env(srcenvid, &srcenv, 1) < 0 || envid2env(dstenvid, &dstenv, 1) < 0) {

ret = -E_BAD_ENV;

return ret;

}

if ((uint32_t)srcva >= UTOP || (uint32_t)srcva % PGSIZE != 0 ||

(uint32_t)dstva >= UTOP || (uint32_t)dstva % PGSIZE != 0 ||

(perm & (~PTE_SYSCALL)) != 0) {

ret = -E_INVAL;

return ret;

}

pg = page_lookup(srcenv->env_pgdir, srcva, &pte);

if (pg == NULL) {

ret = -E_INVAL;

return ret;

}

if ((perm & PTE_W) && (*pte & PTE_W) == 0) {

ret = -E_INVAL;

return ret;

}

ret = page_insert(dstenv->env_pgdir, pg, dstva, perm);

return ret;sys_page_unmap: explained by its name1

2

3

4

5

6

7

8

9

10

11

12int ret = 0;

struct Env* env;

if (envid2env(envid, &env, 1) < 0) {

ret = -E_BAD_ENV;

return ret;

}

if ((uint32_t)va >= UTOP || (uint32_t)va % PGSIZE != 0) {

ret = -E_INVAL;

return ret;

}

page_remove(env->env_pgdir, va);

return ret;

dumbfork.c

this dumbfork is a simple example, it does not implement COW.

First, it set new environment status to ENV_NOT_RUNNABLE, copy parent’s trapframe to the new one to make it look like call dumbfork, most importantly, set eax register to zero, thus in child environment, dumbfork will return zero.

1 | |

Second, copy entire user address space from parent to child, which begins from UTEXT to end(it means end address of user program set by user.ld just like kernel.ld).

One more thing, the value of end can be found in obj/user/dumbfork.sym.

1 | |

Last, copy user stack which just takes one page into child environment.

1 | |

About duppage, it first uses sys_page_alloc to allocate physical page in child environment, then uses sys_page_map to map this new-allocated page from UTEMP in parent environment, what’s more, uses memmove to copy user program at UTEXT into UTEMP, lastly, uses sys_page_unmap to unmap physical page at UTEMP in parent environment.

1 | |

Part B: Copy-on-Write Fork

However, a call to fork() is frequently followed almost immediately by a call to exec() in the child process, which replaces the child’s memory with a new program. This is what the the shell typically does, for example. In this case, the time spent copying the parent’s address space is largely wasted, because the child process will use very little of its memory before calling exec().

To do this, on fork() the kernel would copy the address space mappings from the parent to the child instead of the contents of the mapped pages, and at the same time mark the now-shared pages read-only. When one of the two processes tries to write to one of these shared pages, the process takes a page fault. At this point, the Unix kernel realizes that the page was really a “virtual” or “copy-on-write” copy, and so it makes a new, private, writable copy of the page for the faulting process. This optimization makes a fork() followed by an exec() in the child much cheaper: the child will probably only need to copy one page (the current page of its stack) before it calls exec().

In the next piece of this lab, you will implement a “proper” Unix-like fork() with copy-on-write, as a user space library routine. Implementing fork() and copy-on-write support in user space has the benefit that the kernel remains much simpler and thus more likely to be correct.

User-level page fault handling

FUNC::set_pgfault_handler

It allocates a page for the user exception stack. Then, it calls sys_env_set_pgfault_upcall to store the assembly pgfault entrypoint defined in lib/pfentry.S. Then it sets the user-mode global variable _pgfault_handler to point to our custom page fault handler function. This global variable _pgfault_handler is used by the assembly code inside pfentry.S itself.

1 | |

During a page fault occurs(FUNC::page_fault_handler)

- When a page fault occurs, execution jumps to the kernel mode. the kernel starts executing

page_fault_handler, which detects that we page faulted from user mode.1

2

3if ((tf->tf_cs & 3) == 1) {

panic("a page fault in kernel mode");

} - Then the kernel verifies if that environment has a page fault upcall (

curenv->env_pgfault_upcall), and that the environment has its user exception stack mapped (as previously mentioned, memory has been mapped there by set_pgfault_handler).1

2// 检验用户异常栈是否映射好

user_mem_assert(curenv, (const void *)utf, sizeof(struct UTrapframe), PTE_W); - The kernel copies all register values from the trap frame to the user exception stack, taking into account whether the environment page faulted from the user stack or elsewhere.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15// 处理递归发生异常的情况

// 发生异常时,用户环境已经在用户异常堆栈上运行,应该在当前tf->tf_esp下启动新的堆栈帧

if (tf->tf_esp < UXSTACKTOP && tf->tf_esp >= UXSTACKTOP-PGSIZE)

// 递归发生异常,则在异常栈上空出4字节后在处理

utf = (struct UTrapframe *)(tf->tf_esp - sizeof(struct UTrapframe) -4);

else

// 首次发生异常,应该在UXSTACKTOP启动新的堆栈帧

utf = (struct UTrapframe *)(UXSTACKTOP - sizeof(struct UTrapframe));

// 设置异常栈帧

utf->utf_fault_va = fault_va;

utf->utf_err = tf->tf_err; // 缺页中断的错误码,有硬件自动压入到trapframe上

utf->utf_regs = tf->tf_regs;

utf->utf_eflags = tf->tf_eflags;

utf->utf_eip = tf->tf_eip;

utf->utf_esp = tf->tf_esp; - The kernel modifies the trap frame of the environment so it actually returns to the page fault upcall instead of returning back to where the page fault occured. This is done by modifying the trap frame instruction pointer and setting it equal to the address of the page fault upcall (which is written in assembly), and the stack pointer to point to the user exception stack.

1

2

3

4// 设置中断返回的两个重要寄存器, esp 和 eip

// 中断返回时的用户程序的栈在UXSTACKTOP下,而EIP指向pfentry.S的__pgfault_upcall

tf->tf_esp = (uintptr_t)utf;

tf->tf_eip = (uintptr_t)curenv->env_pgfault_upcall;

PageFault Entry

- When a page fault actually occurs, the kernel switches our ESP to point to the user exception stack if we’re not already on the user exception stack, and then it pushes a UTrapframe onto our user exception stack:

1

2

3

4

5

6

7

8

9// trap-time esp

// trap-time eflags

// trap-time eip

// utf_regs.reg_eax

// ...

// utf_regs.reg_esi

// utf_regs.reg_edi

// utf_err (error code)

// utf_fault_va <-- %esp - After the trap frame is popped, execution is in the assembly code, which calls our custom page fault handler and then later cleans up after itself.

1

2

3

4

5// Call the C page fault handler.

pushl %esp // function argument: pointer to UTF

movl _pgfault_handler, %eax

call *%eax

addl $4, %esp // pop function argument - push the trap-time

%eiponto the trap-time stack!1

2

3

4

5addl $8, %esp // 忽略fault_va和err

movl 0x20(%esp), %eax // trap-time eip移入eax寄存器

subl $4, 0x28(%esp) // 将trap-time 栈首下移4字节用于保存eip

movl 0x28(%esp), %ebx // 将trap-time 栈首地址移入ebx寄存器

movl %eax, (%ebx) // 将eip存入trap-time的栈中 - clean it self

1

2

3

4

5

6

7

8

9// Restore the trap-time registers.

popal

// Restore eflags from the stack.

add $4, %esp // 忽略eip

popfl // restore eflags寄存器

// Switch back to the adjusted trap-time stack.

popl %esp

// Return to re-execute the instruction that faulted.

ret

why do user/faultalloc and user/faultallocbad behave differently?

Although page_fault_handler in them is same, one uses cprintf() and the other uses sys_cput().

- about

cprintf(), it callsvprintfmt, which will try to visitPARAMETER::appassed to it. Eventually, it will invoke a page fault.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25// lib/printf.c

int

vcprintf(const char *fmt, va_list ap)

{

struct printbuf b;

b.idx = 0;

b.cnt = 0;

vprintfmt((void*)putch, &b, fmt, ap);

sys_cputs(b.buf, b.idx);

return b.cnt;

}

int

cprintf(const char *fmt, ...)

{

va_list ap;

int cnt;

va_start(ap, fmt);

cnt = vcprintf(fmt, ap);

va_end(ap);

return cnt;

} - about

sys_cput(), it finally callssys_cputs()inkern/syscall.cafter interrupt for system calls. Eventually, it will encounter the check byuser_mem_assert, so it will have no chance to invoke a page fault.1

2

3

4

5

6

7

8

9

10

11static void

sys_cputs(const char *s, size_t len)

{

// Check that the user has permission to read memory [s, s+len).

// Destroy the environment if not.

// LAB 3: Your code here.

user_mem_assert(curenv, s, len, PTE_U);

// Print the string supplied by the user.

cprintf("%.*s", len, s);

}

Implementing Copy-on-Write Fork

Difference between COWFork and dumbfork

- while

dumbfork()copied pages,fork()will initially only copy page mappings. page_fault_handlerneed to be set in both parent and child.

Implement

fork()

- The parent installs

pgfault()as the C-level page fault handler - The parent calls

sys_exofork()to create a child environment. - For each writable or copy-on-write page in its address space below

USTACKTOP, the parent calls duppage

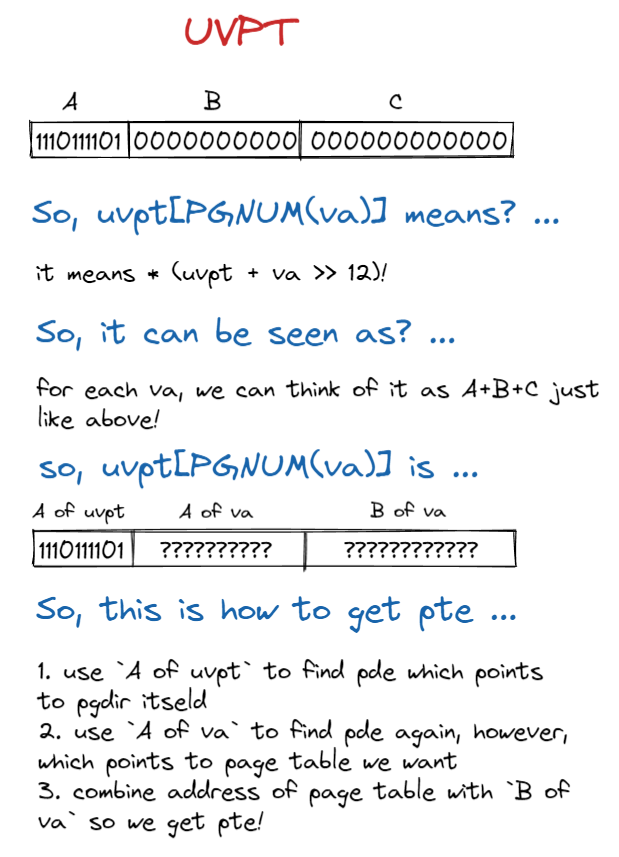

a cleaver mapping trick to access pde, pte in the user space.

- set

pgfault_handlerfor child and set childRUNNABLE

1 | |

duppage()

- map the page copy-on-write into the address space of the child and then remap the page copy-on-write in its own address space. And for those pages not writable and not copy-on-write, just map them readable into the address space of the child.

1 | |

pgfault()

- Check that the faulting access was (1) a write, and (2) to a copy-on-write page. If not, panic.

- Allocate a new page, map it at a temporary location (PFTEMP), copy the data from the old page to the new page, then move the newpage to the old page’s address.

1 | |

Costly lesson

when I finish all this above, user exception stack just overflows after 1000 generate 1001 environment! I am so confused cause others’ code is not helpful on my machine. Fault is below:[00001001] user_mem_check assertion failure for va eebfefcc

This is how I fix this:

- print “page fault” in

page_fault_handleroftrap.c

we will find page fault was invoked immediately after a new environment created, up to 30 times. So, I guess maybe it’s caused by page freed wrongly cause mechanism of invoking a page fault is decided by hardware. So let’s guess what caused a wrong free? - print “page ref” in

region_allocofenv.c

‘causeregion_allocallocates most pages that a user program needs, unsurprisely, ref of all pages allocated is 0! - reason

Instead of usingpage_insert, I implementregion_allocusingpgdir_walkto find pte and then assign right value to it, which causes all pages allocated have 0 on their ref, finally contributing to freed wrongly.

Part C: Preemptive Multitasking and Inter-Process communication(IPC)

In the final part of lab 4 you will modify the kernel to preempt uncooperative environments and to allow environments to pass messages to each other explicitly.

Clock Interrupts and Preemption

In order to allow the kernel to preempt a running environment, forcefully retaking control of the CPU from it, we must extend the JOS kernel to support external hardware interrupts from the clock hardware.

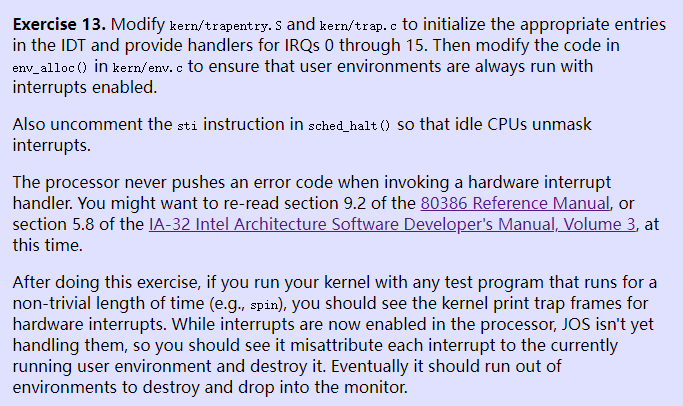

Interrupt Discipline

External interrupts (i.e., device interrupts) are referred to as IRQs. In inc/trap.h, IRQ_OFFSET is defined to be decimal 32. Thus the IDT entries 32-47 correspond to the IRQs 0-15. For example, the clock interrupt is IRQ 0. Thus, IDT[IRQ_OFFSET+0] (i.e., IDT[32]) contains the address of the clock’s interrupt handler routine in the kernel.

1 | |

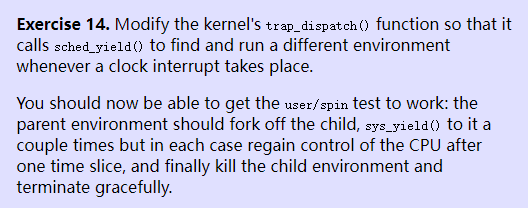

Handling Clock Interrupts

1 | |

Inter-Process communication (IPC)

We’ve been focusing on the isolation aspects of the operating system, the ways it provides the illusion that each program has a machine all to itself. Another important service of an operating system is to allow programs to communicate with each other when they want to. It can be quite powerful to let programs interact with other programs.

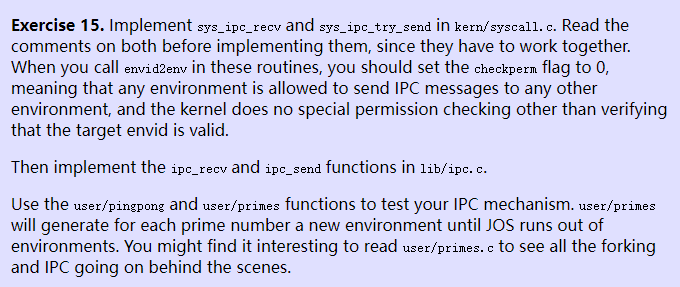

IPC in JOS

You will implement two system calls, sys_ipc_recv and sys_ipc_try_send. Then you will implement two library wrappers ipc_recv and ipc_send.

The “messages” that user environments can send to each other using JOS’s IPC mechanism consist of two components: a single 32-bit value, and optionally a single page mapping. Allowing environments to pass page mappings in messages provides an efficient way to transfer more data than will fit into a single 32-bit integer, and also allows environments to set up shared memory arrangements easily.

Sending and Receiving Messages

To receive a message, an environment calls sys_ipc_recv. This system call de-schedules the current environment and does not run it again until a message has been received.

To try to send a value, an environment calls sys_ipc_try_send with both the receiver’s environment id and the value to be sent. If the named environment is actually receiving (it has called sys_ipc_recv and not gotten a value yet), then the send delivers the message and returns 0. Otherwise the send returns -E_IPC_NOT_RECV to indicate that the target environment is not currently expecting to receive a value.

A library function ipc_recv in user space will take care of calling sys_ipc_recv and then looking up the information about the received values in the current environment’s struct Env.

Similarly, a library function ipc_send will take care of repeatedly calling sys_ipc_try_send until the send succeeds.

Transferring Pages

When an environment calls sys_ipc_recv with a valid dstva parameter (below UTOP), the environment is stating that it is willing to receive a page mapping. If the sender sends a page, then that page should be mapped at dstva in the receiver’s address space. If the receiver already had a page mapped at dstva, then that previous page is unmapped.

When an environment calls sys_ipc_try_send with a valid srcva (below UTOP), it means the sender wants to send the page currently mapped at srcva to the receiver, with permissions perm.

After any IPC, the kernel sets the new field env_ipc_perm in the receiver’s Env structure to the permissions of the page received, or zero if no page was received.

Implementing IPC

FUNC:sys_ipc_recv: give up current environment’s cpu by using FUNC::sys_yield, let others know it’s waiting for message.

1 | |

FUNC::sys_ipc_try_send: check all condtions and remeber to set dstenv->env_tf.tf_regs.reg_eax to zero otherwise the guy who called sys_ipc_recv will return non-zero and fail.

1 | |

wrapper function: ipc_recv and ipc_send: remeber keep try_send in ipc_send.

1 | |